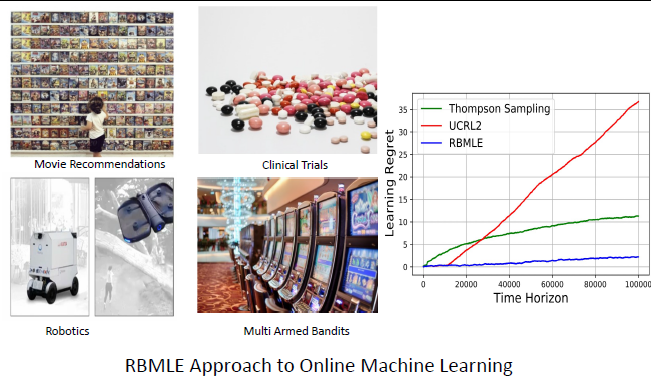

Reward Biased Maximum Likelihood Estimate Approach to Online Machine Learning

We revisit the Reward Biased Maximum Likelihood Estimate (RBMLE) algorithm that was proposed in (Kumar and Becker, 1982) to solve the problem of adaptive control (Richard Bellman 1961, Feldbaum, 1960a). It solved the “exploration vs. exploitation problem” by employing a bias in favor of parameters with larger optimal rewards, thereby introducing the principle of optimism in the face of uncertainty.

Modern attention is focused on the much finer notion of “learning regret” (Lai and Robbins, 1985). We show that RBMLE has a regret of O(logT) over a time horizon of T steps, similar to state-of-the-art algorithms. Simulation studies show that RBMLE outperforms other algorithms such as UCRL2 and Thompson Sampling.

References:

Akshay Mete, Rahul Singh and P. R. Kumar, “Reward Biased Maximum Likelihood Estimation for Reinforcement Learning”, Learning for Dynamics & Control, 2021

Faculty: Rahul Singh, ECE