A Vapnik’s Imperative to Unsupervised Domain Adaptation

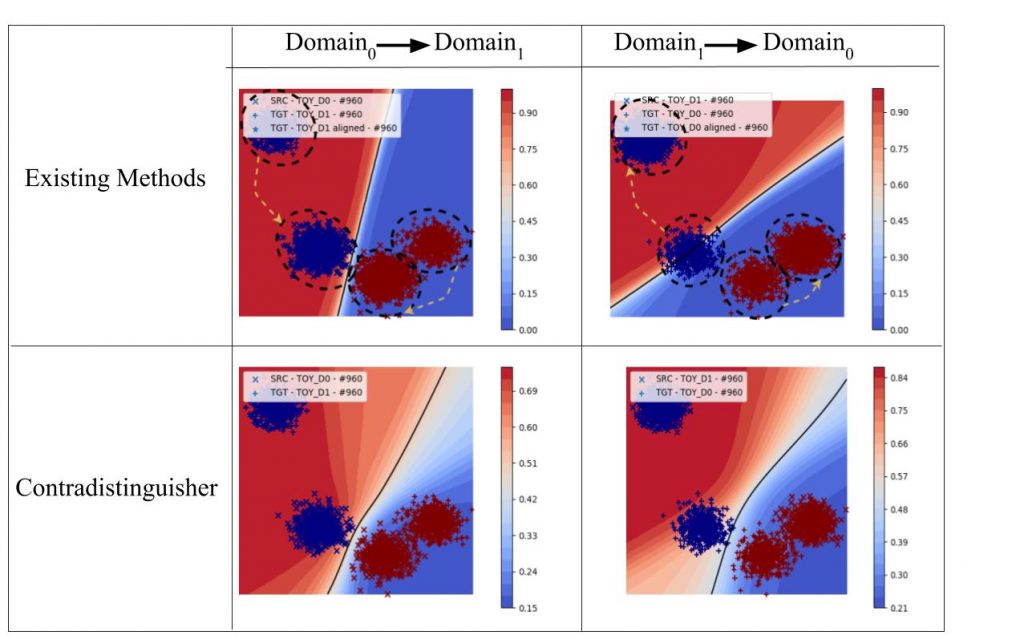

The success of deep neural networks in advancing AI can be attributed largely to models trained on large amounts of labeled data. In some domains, however, acquiring labeled data at that scale is very expensive or not possible at all. One way to address this is cross-domain learning, in which knowledge from a labeled source domain is transferred to an unlabeled target domain. In our work, following Vapnik’s imperative of statistical learning (when solving a problem, do not solve a more general problem as an intermediate step), we propose a direct approach to unsupervised domain adaptation called Contradistinguisher. With this technique, we set benchmark results on almost all of the datasets studied in the literature.

References:

S. Balgi and A. Dukkipati. Contradistinguisher: A Vapnik’s Imperative to Unsupervised Domain Adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

Website: https://www.csa.iisc.ac.in/~ambedkar/

Faculty: Ambedkar Dukkipati, CSA